If you’ve ever designed a survey, or are designing one now, you’ll know how challenging it can be to write questions that are easy to understand, unbiased and genuinely useful for your research objectives. It’s a real craft that takes practice to master. To make this easier, we have compiled a list of eight best practices for writing effective survey questions. While this list isn’t exhaustive, it’s a good starting point.

Make sure every question counts

The more questions you add to your survey, the less likely respondents are to complete it entirely. At Bunnyfoot, we aim to keep surveys within a 10-12 minute limit to maintain engagement. Before finalising your survey, review each question and ask yourself, “How will this answer support my research objectives?” Consider removing any questions that aren’t essential.

Be clear and concise

Use simple, direct language and avoid jargon, acronyms, or technical terms that could confuse respondents. The goal is to make your questions easy to read and understand at a glance.

An example:

Avoid leading survey questions

Leading questions push respondents towards a particular answer, skewing your data. Instead, use neutral language to allow respondents to answer honestly.

An example:

Stick to one topic per question

Avoid double-barrelled questions, which ask about more than one topic in a single question. They can confuse respondents and yield unclear results. Instead, focus on a single topic per question.

An example:

Keep open-ended survey questions to a minimum

Too many open-ended questions can cause respondent fatigue and increase the risk of survey abandonment. They also make the data harder and slower for you to analyse afterwards. Use open-ended questions sparingly, to gather qualitative insights that closed questions alone might miss.

Give respondents an out

Not everyone will have an answer to your survey questions, or wish to share one. Include response options such as “Prefer not to say” or “Don’t know” for questions that respondents may be unable or unwilling to answer. This helps prevent respondents from selecting inaccurate or random answers, which would skew your results.

An example:

Consider the questions’ order

Order questions to encourage completion and accuracy. Start with easy, non-sensitive questions and gradually progress to more complex or personal topics. This helps respondents feel comfortable and invested in the survey.

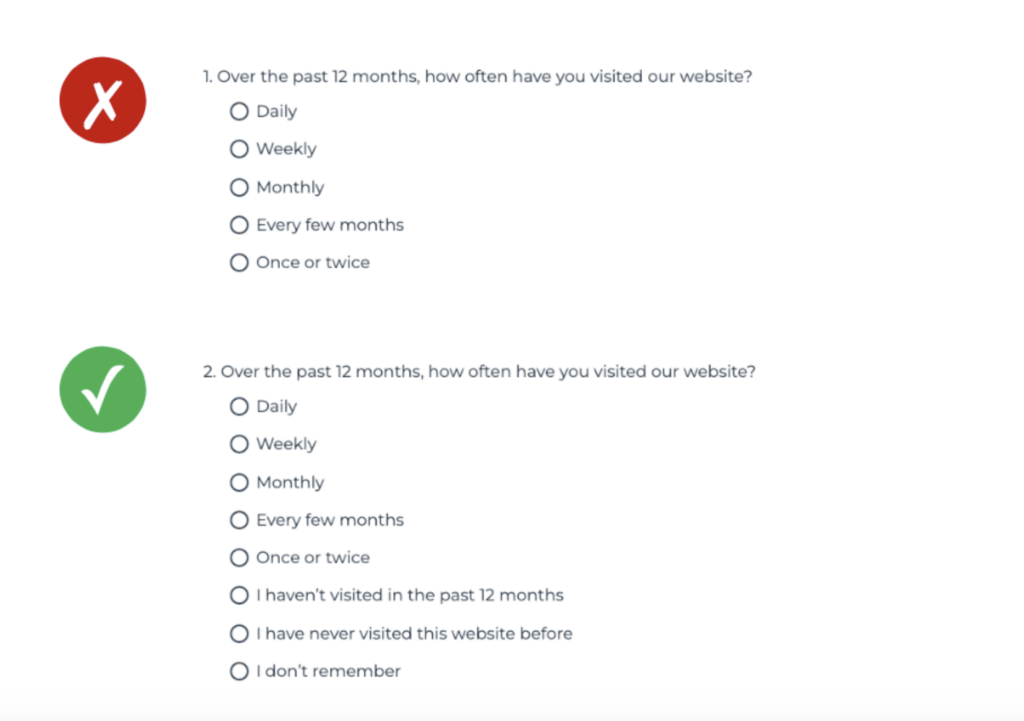

Ensure your survey responses are mutually exclusive

Response options should not overlap. Make sure each option is distinct from the others, so respondents can easily select the answer that best applies to them.

An example:

Going further

Want to dive deeper into survey design? We offer a comprehensive course covering a range of quantitative user research methods, with a significant focus on surveys. Sign up now for our Introduction to Quantitative User Research Methods course.

Alternatively, if you’re looking for support in designing, distributing or analysing a survey, explore our survey services or get in touch with us.