Bunnyfoot helped this public sector client transform their automated accessibility audit findings into actionable change for users with disabilities

What is an accessibility audit?

Let’s start by explaining what we mean by ‘accessibility audit’. At Bunnyfoot we know that the best way to support any type of user is to observe real people and ask them about their real experiences. The ‘gold standard’ approach for research in digital accessibility would always be testing with end users.

Testing, particularly accommodating those with complex needs and more severe physical and cognitive impairments, can be resource and time intensive. If you are starting out on your journey toward digital accessibility, it helps to understand something about how easy it will be for a user to engage with your site beforehand, and make sure that your participants’ time and your own resources are used to the best effect.

Many teams will prefer to start with an evaluation of the site or service against established digital accessibility guidelines, such as the Web Content Accessibility Guidelines (WCAG).

This methodology, called an ‘Accessibility Audit’, is a page- or component-oriented investigation. It can be manual (done by a person) or automated (done by a tool), and the goal is to highlight areas of the site or service that would not meet the criteria for accessibility as stated in the guidelines.

Our preferred approach is to conduct an ‘expert evaluation’ that can identify key areas of difficulty and the likely impact on different types of users.

Translating data into actionable change

Audits can be a really good method of understanding, in a broad sense, where best to focus efforts to support end users with disabilities. Most audits will provide a list of areas of the site that are not in line with the guidelines, and specify which guidelines are being met.

Some key challenges facing teams after conducting an audit include:

- What is the true impact of the issues for end users (and business goals)?

- How do we make things better?

- Where should we focus our efforts for the best effects?

While an audit is an objective measure of conformity to guidelines (for example, it can tell you if skip navigation is present), a more human-oriented perspective is valuable if you want to start moving effectively toward more accessible content. As with any user experience question, supporting users with disabilities means understanding those users and their needs, identifying best practice and viable solutions, and working with feasible and achievable roadmaps for change.

In this article, we will share with you one client’s journey from an automated audit to a compliant plan for delivering accessibility on their public sector site.

The power of an evaluation

Our client, a public body providing information to individuals and businesses in an EU-legislated territory, came to us for support in meeting the legal requirements for accessibility under the existing legislation. Their development partners had conducted an automated audit of the website and their goal was to translate these findings into an actionable roadmap for change and establish an accessibility statement to support users in understanding where they were on that journey.

Faced with a multi-page report without prioritisation, severity or user impact information, the team had already identified a number of areas where they could modify templates and resolve some issues site-wide. While these measures could translate some of the ‘fails’ into ‘passes’ against the guidelines, they were keen to understand how the issues would impact users of the site on various user journeys.

Our team conducted an Expert Accessibility Evaluation. Working with the content and development teams, we agreed the scope of the research, and discussed the types of users, their diverse needs, and any changes that were already scheduled.

An expert evaluation puts users at the forefront, as we try to anticipate what kind of barriers someone might experience. We could be reviewing content to see if it is likely to support neurodivergent cognitive styles, or testing journeys with a specific assistive device, such as a screen reader.

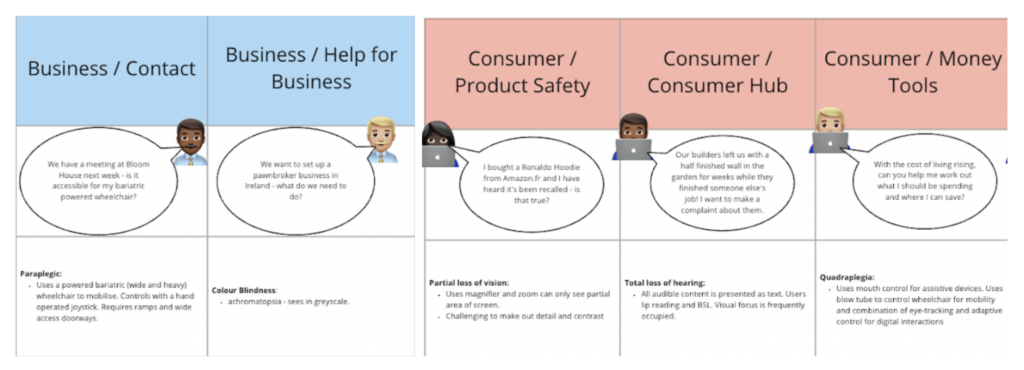

Users of our client’s site fall into two main categories: Businesses and Consumers. To support the team and help us to choose key content journeys and tasks, we devised some micro-personas to represent these user needs and a range of impairments.

Not every page and content element on a site is reviewed during an expert evaluation. Instead, we focus on the key areas that have the most significance or impact for users. In this evaluation, as the site relied on templates that were due to be replaced, we excluded sections using the obsolete templates too. Our results provided the teams with a sense of the severity of issues, and their impact on user outcomes, based on our experience of real world users and accessibility standards acquired over 25 years.

Our consultants worked their way through the site, capturing evidence and examples of areas of good practice and issues that are likely to produce obstacles for users with access needs. These include:

- Ability to skip to core content

- Adequate visual contrast

- Can be used with a keyboard and other input devices

- Semantically structured HTML

- Clear labelling of form and other page elements for assistive tech users

- Text alternatives are used appropriately

- Cognitively easy with clear content and structure

- Control of media and other content display

- Compatibility with assistive devices

- Non-visual experience is equivalent to visual display of content

- Reliance on mono-sensory characteristics

The resulting report didn’t just outline the recommendations and priorities for change, but also included persuasive and educational examples of the issues found. As with all our work, we shared these findings with the team in a debrief meeting, where we could discuss the issues and next steps, including drafting an Accessibility Statement that aligns with legislative requirements and can be added to the site.

Beyond compliance

While legislative requirements like the European Accessibility Act and relevant UK digital accessibility requirements are important instruments to ensure digital products and services are meeting the needs of people with disabilities, meeting the requirements of this legislation does not always ensure accessible experiences.

Let us be realistic: universal accessibility is a challenging ambition and, for most content and delivery teams, the goal is improvement in that direction. Our evaluations will take you beyond compliance with legislation and conformance to guidelines, and mean that you are able to meet the most important needs, understanding how to support the most vulnerable users on the most critical journeys and paths.

While an audit will identify pages and content that are not meeting guidelines, no users have ever told us that their biggest obstacle to using a site is “it doesn’t conform with WCAG SC 2.5.2 Pointer Cancellation”! Nor has any stakeholder been motivated to act on accessibility issues by a list of ‘pass / fail’ outcomes. In some cases, relying on automated code audits can actually mask significant usability issues, as they can’t provide an understanding of how the user will experience an issue. Let’s take ‘skip nav’: Version 2.2 of the Web Content Accessibility Guidelines, which form the foundation of most accessibility legislation for websites, includes a ‘Success Criterion’, SC 2.4.1: Bypass Blocks.

- “A mechanism is available to bypass blocks of content that are repeated on multiple Web pages.”

The guidance goes on to suggest ways in which this can be done. It relates specifically to users who navigate the site with a keyboard, and is typically met by adding a ‘skip to content’ link that becomes available at the start of the page when you navigate using a keyboard. The link bypasses the repetitive or supplementary bits on the page (like the site navigation, search box, promo banners etc.) and allows the user to access the ‘primary content’ of the page.

If we run an automated accessibility scan on a page, it can tell us if there is a ‘skip nav’ element present. It may even tell us what the link text for users is and the destination (in the form of a URL or in-page link address). So, if there is a link, it will pass this criterion.

What it can’t tell us is whether that text and destination are useful to the user in this context, or whether they relate to the ‘primary content’ that is the requirement for passing the guideline. It also can’t tell us whether the link is working properly, for example whether the page view follows the user’s location after using the link, or whether the text accurately describes the destination.
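To make that gap concrete, here is a minimal sketch in Python, using only the standard library. It is an illustration under stated assumptions, not a description of how any particular scanning tool works: the `scan` function, the `SkipLinkScan` class and the sample page are all hypothetical. It treats the first in-page link as the ‘skip nav’ and shows how a presence-only check can pass even though the link points at an id that does not exist on the page.

```python
from html.parser import HTMLParser


class SkipLinkScan(HTMLParser):
    """Collects the first in-page link (href="#...") and every element id."""

    def __init__(self):
        super().__init__()
        self.first_inpage_href = None
        self.ids = set()

    def handle_starttag(self, tag, attrs):
        attr_map = dict(attrs)
        if attr_map.get("id"):
            self.ids.add(attr_map["id"])
        href = attr_map.get("href") or ""
        is_inpage_link = tag == "a" and href.startswith("#") and len(href) > 1
        if is_inpage_link and self.first_inpage_href is None:
            self.first_inpage_href = href


def scan(html):
    """Hypothetical check: is a skip link present, and does its target exist?"""
    parser = SkipLinkScan()
    parser.feed(html)
    parser.close()
    present = parser.first_inpage_href is not None
    target_exists = present and parser.first_inpage_href[1:] in parser.ids
    return {"skip_link_present": present, "target_exists": target_exists}


# Hypothetical page: the link points at "#main", but the content uses id="content".
PAGE = """
<body>
  <a href="#main">Skip to content</a>
  <nav><a href="/about">About</a></nav>
  <div id="content"><h1>Welcome</h1></div>
</body>
"""

result = scan(PAGE)
print(result)  # {'skip_link_present': True, 'target_exists': False}
```

A presence-only check would mark this page as a pass. The second check catches the broken destination, but neither can judge whether the link text makes sense to the user, whether focus and the page view actually move to the primary content, or whether that content is what the user needed. Those questions still need a person.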

An audit is often an important first step on your journey toward improving accessibility. As our client found, an expert review will provide a clearer picture of the impact of issues on end users. Many of the common issues will come to light and can be resolved before taking the site to your end users and gathering critical insight into what matters most to people with access needs.

Want to learn more?

Got questions? Sound familiar? We’d love to chat. Our teams have 25+ years’ experience delivering advice, research and design solutions for inclusive products. Contact us to learn more about our services in digital accessibility.

Or, if you are keen to learn more about how to deliver accessible digital services in your own teams, why not look at our Practical Accessibility training course?